Introduction to General-Level Scoring

General-Level is an evaluation framework designed to position and assess the capabilities of current multimodal large language model (MLLM) generalists more accurately, charting a path toward authentic multimodal AGI.

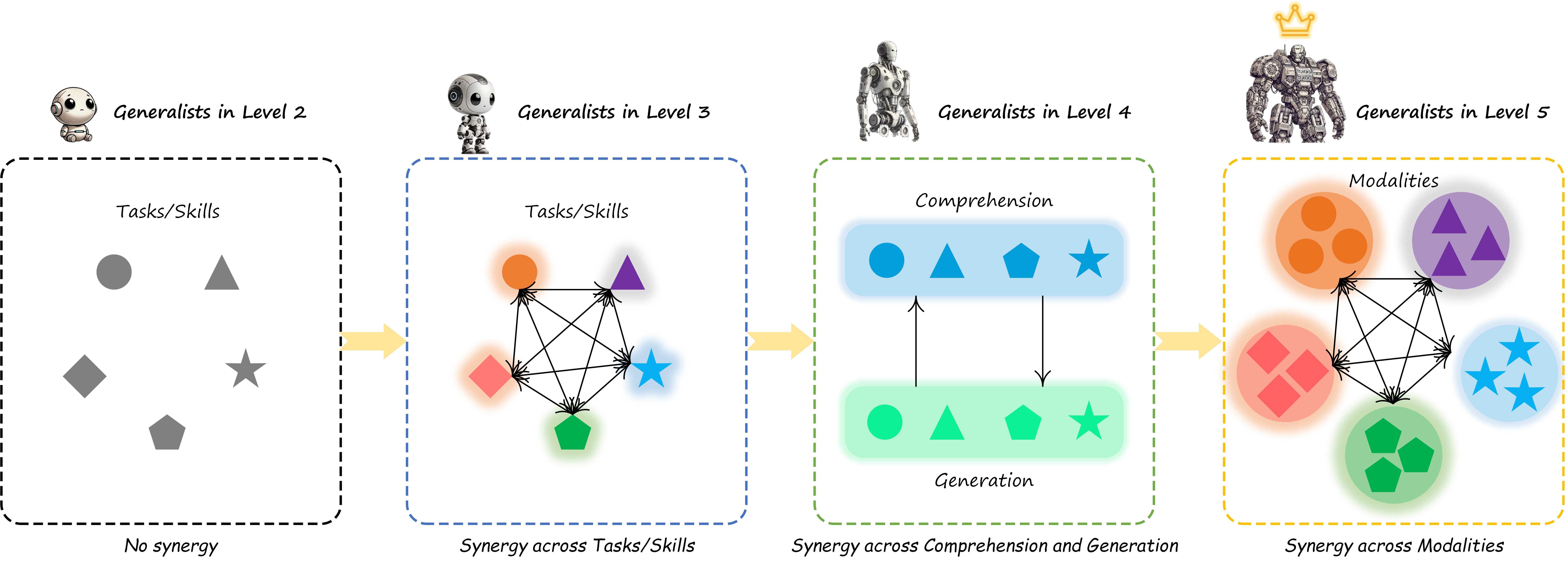

Drawing inspiration from the tiered levels the automotive industry uses to classify autonomous vehicles, General-Level defines five principal levels of model performance and generality.

Central to the framework is synergy ability, which serves as the evaluation criterion: capabilities are categorized by whether a generalist preserves synergy within and across multimodal comprehension and generation, as well as across modalities.

From the lowest to the highest level, the required scope of synergy progressively widens, from single tasks or modalities up to total synergy.

As a generalist strives to advance to a higher level, it must demonstrate significant enhancements in its synergy capabilities.

Defining Levels Centered on Synergy

General-Level introduces a 5-level taxonomy of multimodal generalists that evaluates them by the scope and strength of the synergy they preserve.

Specifically, we define three levels and scopes of synergy, ranked from low to high: task-task, comprehension-generation, and modality-modality.

Next, we give the scoring specification of General-Level:

Level-1 Specialists

Many current models are task-specific players (i.e., SoTA specialists), each fine-tuned on a specific task or on a dataset of specific modalities. They cover a wide range of tasks, such as linguistic/visual recognition, classification, generation, segmentation, grounding, inpainting, and more.

- Level-1 Scoring

For the \(i\)-th task in the benchmark, the score of the current SoTA specialist is recorded as:

\[\sigma_i^{sota}\]

- Level-1 Example Specialists

Level-2 Generalists of Unified Comprehension and/or Generation

Models at this level are task-unified players, e.g., MLLMs, that support different modalities and tasks. Such MLLMs can integrate various models through existing encoding and decoding techniques to aggregate and unify multiple modalities and tasks (such as comprehension and generation tasks).

- Level-2 Scoring

The Level-2 score is the average of the mean Comprehension score over the \(M\) comprehension tasks and the mean Generation score over the \(N\) generation tasks (i.e., it covers all tasks). A model that achieves a non-zero score on a task's data is considered to support that task; an unsupported task contributes 0. Thus, the more tasks a model supports and the higher its scores, the higher its overall score:

\[S_2 = \frac{1}{2} \left( \frac{1}{M} \sum^{M}_{i=1} \sigma_{i}^{C} + \frac{1}{N} \sum^{N}_{j=1} \sigma_{j}^{G} \right)\]
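As a minimal sketch of the Level-2 formula, the Python function below computes \(S_2\) from per-task scores. The function name `level2_score` and its list-based inputs are illustrative assumptions, not part of General-Level; the sketch also assumes unsupported tasks are recorded with a score of 0 so that they still count toward \(M\) or \(N\).

```python
from typing import Sequence


def level2_score(comp_scores: Sequence[float], gen_scores: Sequence[float]) -> float:
    """Level-2 score S_2: mean of the average Comprehension score and the
    average Generation score.

    comp_scores holds sigma_i^C for all M comprehension tasks; gen_scores holds
    sigma_j^G for all N generation tasks. An unsupported task is expected to
    appear with a score of 0.0, so it still counts toward M or N.
    """
    if not comp_scores or not gen_scores:
        raise ValueError("need at least one comprehension and one generation task")
    mean_c = sum(comp_scores) / len(comp_scores)  # (1/M) * sum_i sigma_i^C
    mean_g = sum(gen_scores) / len(gen_scores)    # (1/N) * sum_j sigma_j^G
    return 0.5 * (mean_c + mean_g)


# Two comprehension tasks and two generation tasks (the second one unsupported).
print(level2_score([60.0, 40.0], [30.0, 0.0]))  # 32.5
```

Because an unsupported task enters the average as 0, supporting more tasks can only raise \(S_2\), matching the intent stated above.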

- Level-2 Example Generalists

Level-3 Generalists with Synergy in Comprehension and/or Generation

Models are task-unified players with synergy in Comprehension and/or Generation. Through joint learning across multiple tasks, the synergy effect lets these MLLMs push performance on several tasks beyond the corresponding SoTA specialist scores.

- Level-3 Scoring

Assign each task a mask weight of 0 or 1: the mask is 1 only if the model's score on that task (\(\sigma_i^{C}\) or \(\sigma_j^{G}\)) reaches or exceeds the SoTA specialist's score, and 0 otherwise. Then compute the masked averages \(S_C\) and \(S_G\) and take their mean. The more tasks on which the model surpasses the SoTA specialist, the higher \(S_3\):

\[\begin{split}S_3 &= \frac{1}{2} \left( S_C + S_G \right) \,, \text{where} \\ S_C &= \frac{1}{M} \sum_{i=1}^M \begin{cases} \sigma^{C}_i & \text{if } \sigma_i^{C} \geq \sigma_i^{C,\text{sota}} \\ 0 & \text{otherwise} \end{cases} \\ S_G &= \frac{1}{N} \sum_{j=1}^N \begin{cases} \sigma^{G}_j & \text{if } \sigma^{G}_j \geq \sigma_j^{G,\text{sota}} \\ 0 & \text{otherwise} \end{cases}\end{split}\]
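The masking in the Level-3 formula can be sketched in Python as follows. The helper names `masked_mean` and `level3_score` are hypothetical, and the per-task SoTA specialist scores are assumed to be supplied in the same task order as the model's scores.

```python
from typing import Sequence, Tuple


def masked_mean(scores: Sequence[float], sota_scores: Sequence[float]) -> float:
    """Average of per-task scores where a task counts (mask = 1) only if the
    model's score reaches or exceeds that task's SoTA specialist score;
    otherwise the task contributes 0 but is still counted in the denominator."""
    if len(scores) != len(sota_scores):
        raise ValueError("scores and sota_scores must have the same length")
    if not scores:
        raise ValueError("need at least one task")
    kept = [s if s >= sota else 0.0 for s, sota in zip(scores, sota_scores)]
    return sum(kept) / len(kept)


def level3_score(
    comp: Sequence[float], comp_sota: Sequence[float],
    gen: Sequence[float], gen_sota: Sequence[float],
) -> Tuple[float, float, float]:
    """Return (S_C, S_G, S_3); S_C and S_G are returned too since Level 4 reuses them."""
    s_c = masked_mean(comp, comp_sota)
    s_g = masked_mean(gen, gen_sota)
    return s_c, s_g, 0.5 * (s_c + s_g)


# In each group, task 1 beats its specialist; task 2 does not and is masked to 0.
print(level3_score([70.0, 40.0], [65.0, 55.0], [30.0, 50.0], [20.0, 60.0]))
# (35.0, 15.0, 25.0)
```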

- Level-3 Example Generalists

Level-4 Generalists with Synergy across Comprehension and Generation

Models are task-unified players, and synergy is across Comprehension and Generation.

- Level-4 Scoring

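Compute the harmonic mean of the Comprehension and Generation scores \(S_C\) and \(S_G\) defined at Level 3. Because the harmonic mean is pulled toward the smaller of the two values, the stronger and more balanced the synergy between Comprehension and Generation, the higher the score: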

\[S_4 = \frac{2 S_C S_G}{S_C + S_G}\]
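A minimal Python sketch of the Level-4 harmonic mean is below; the function name `level4_score` is hypothetical. The formula is undefined when \(S_C + S_G = 0\), so the sketch assumes \(S_4 = 0\) in that case, and it also assumes scores are non-negative.

```python
def level4_score(s_c: float, s_g: float) -> float:
    """Level-4 score S_4: harmonic mean of the masked Level-3 scores S_C and S_G.

    Assumption: scores are non-negative, and S_4 is taken to be 0 when
    S_C + S_G == 0, a case the formula itself leaves undefined.
    """
    if s_c < 0 or s_g < 0:
        raise ValueError("scores must be non-negative")
    if s_c + s_g == 0:
        return 0.0
    return 2.0 * s_c * s_g / (s_c + s_g)


# Same arithmetic mean (25.0) in every call, different balance between groups:
print(level4_score(35.0, 15.0))  # 21.0
print(level4_score(25.0, 25.0))  # 25.0
print(level4_score(50.0, 0.0))   # 0.0
```

All three calls share an arithmetic mean of 25.0, yet the harmonic mean drops as the gap between the groups widens and reaches 0 when one group has no task at or above its SoTA specialist. This is why the level rewards synergy across both groups rather than strength in only one.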

- Level-4 Example Generalists

Level-5 Generalists with Total Synergy across Comprehension, Generation, and Language

Models are task-unified players that preserve the synergy effect across Comprehension, Generation, and Language. In other words, the model not only achieves cross-modal synergy between the Comprehension and Generation groups but also realizes synergy with language: language intelligence enhances multimodal intelligence and vice versa, and understanding multimodal information also aids in understanding language.

- Level-5 Scoring

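On the NLP benchmark data, compute the model's average score \(S_L\) over the \(T\) NLP tasks, counting a task only if the model reaches or exceeds the SoTA NLP specialist on it. Normalize \(S_L\) to a weight \(w_L \in [0,1]\) and multiply it by the Level-4 score to obtain the Level-5 score: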

\[\begin{split}S_{5} &= S_{4} \times w_{L} \,, \text{where} \\ w_L &= \frac{S_L}{S_{\text{total}}} \,, \\ S_L &= \frac{1}{T} \sum_{k=1}^T \begin{cases} \sigma_k & \text{if } \sigma_k \geq \sigma_k^{\text{sota}} \\ 0 & \text{otherwise} \end{cases}\end{split}\]
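A minimal sketch of the Level-5 computation follows. The section does not define \(S_{\text{total}}\); the code assumes it is the maximum attainable score on the benchmark's scale (100 for percentage metrics), which is one way to keep \(w_L\) in \([0,1]\). The function name `level5_score` and the `s_total` parameter are hypothetical.

```python
from typing import Sequence


def level5_score(
    s4: float,
    nlp_scores: Sequence[float],
    nlp_sota: Sequence[float],
    s_total: float = 100.0,  # ASSUMPTION: max attainable score; not defined in the source
) -> float:
    """Level-5 score S_5 = S_4 * w_L, with w_L = S_L / S_total.

    S_L averages the model's NLP task scores, masking to 0 every task on which
    the model falls short of the SoTA NLP specialist.
    """
    if len(nlp_scores) != len(nlp_sota) or not nlp_scores:
        raise ValueError("need matching, non-empty NLP score lists")
    kept = [s if s >= sota else 0.0 for s, sota in zip(nlp_scores, nlp_sota)]
    s_l = sum(kept) / len(kept)
    w_l = s_l / s_total
    if not 0.0 <= w_l <= 1.0:
        raise ValueError("w_L fell outside [0, 1]; check s_total against the score scale")
    return s4 * w_l


# One of two NLP tasks beats its specialist, so S_L = 40.0 and w_L = 0.4;
# with S_4 = 21.0 the result is approximately 8.4.
print(level5_score(21.0, [80.0, 20.0], [75.0, 60.0]))
```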

- Level-5 Example Generalists

Attention

None found yet (let's wait for the multimodal ChatGPT moment!)